Data as a Product in Data Platform (PoC)

Data as a Product: Vision

Each Data Product will require a specification (described here, still under refinement) alongside other defined pieces of descriptive metadata captured at registration.

Data Products:

- May be multi-component, e.g. consisting of multiple tables

- Can consist of structured or unstructured data (or a combination)

- Are controlled and updated by Data Owners

- Include metadata describing the data

We want to design the Data Product management process around a user-facing API. This API will allow users to register, update, and retrieve information about a Data Product.

The data sent to this API as part of registering or updating a data product will flow through standard Data Lake zones: Landing -> Raw -> Curated.

Note

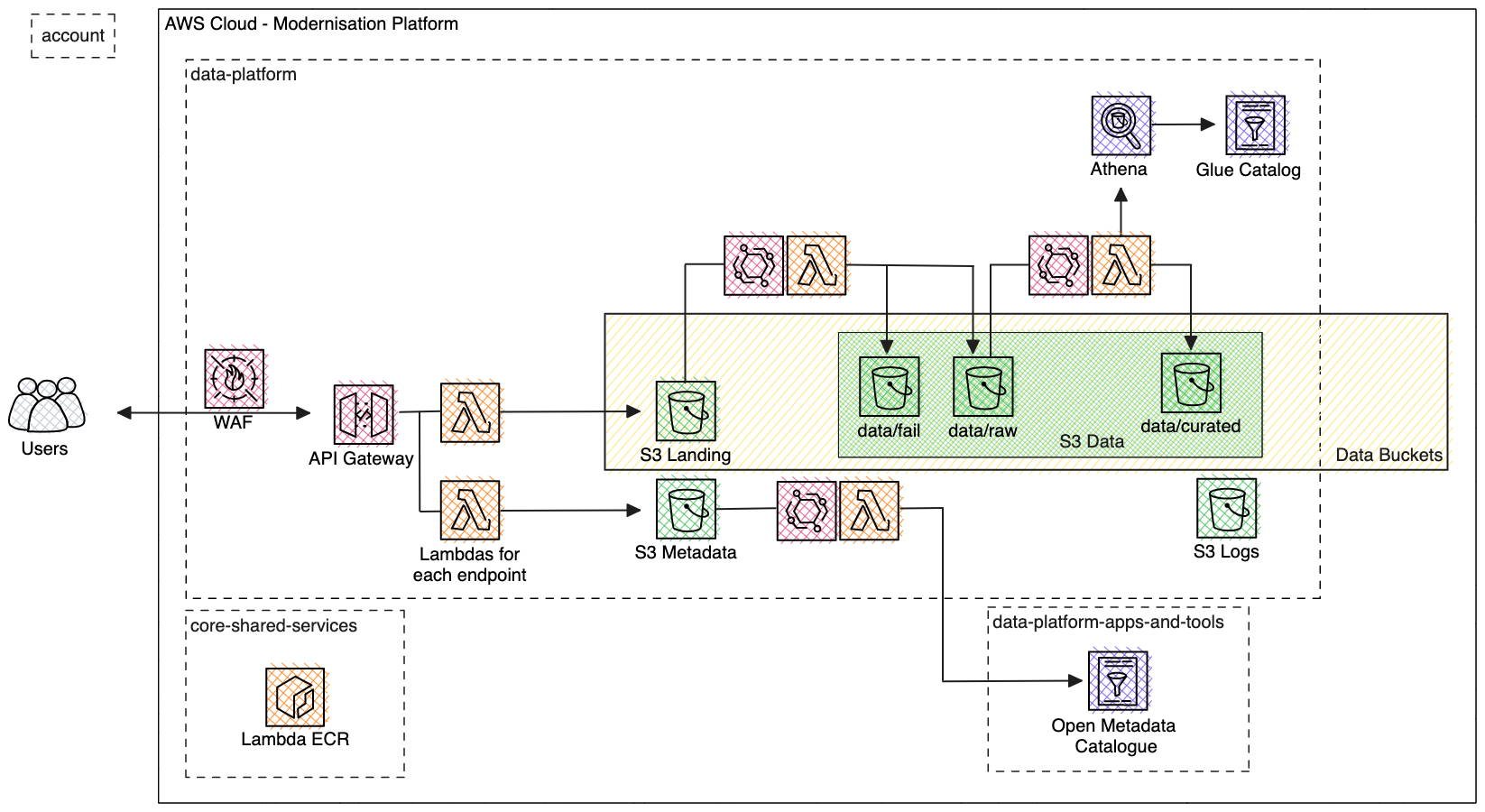

Implementation: The infrastructure will be built in the Data Platform’s Modernisation Platform environment(s).

Data Flow

Users with permission to manage Data Products may register or amend them. Registration, and some categories of amendment, involve ingesting data through our data API. This API is backed by containerised lambdas that carry out each step of the event-driven data processing pipeline. Data first goes to a ‘landing’ bucket ahead of data validation. It is then moved to a ‘raw history’ bucket folder to retain a copy of the data. The arrival of structured data in the raw history folder triggers a ‘curation’ lambda, which standardises the file type and data format before placing the data in the final ‘curated’ bucket folder. This curated data is what is surfaced as the data product, and it is registered in the AWS Glue Data Catalog.
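The zone-to-zone movement described above can be pictured as a mapping from one upload to an object key in each zone. The following is a minimal sketch only: the prefixes (`landing/`, `raw_history/`, `curated/`), the `load_timestamp=` path segment and the function name `zone_keys` are all hypothetical and are not taken from the pipeline code.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath


@dataclass(frozen=True)
class ZoneKeys:
    """Object keys for a single upload in each zone (hypothetical layout)."""

    landing: str
    raw: str
    curated: str


def zone_keys(data_product: str, table: str, load_timestamp: str, filename: str) -> ZoneKeys:
    """Derive illustrative S3 keys for the landing, raw history and curated zones."""
    # The curated copy is assumed to be written as parquet, so swap the extension.
    stem = PurePosixPath(filename).stem
    base = f"{data_product}/{table}/load_timestamp={load_timestamp}"
    return ZoneKeys(
        landing=f"landing/{base}/{filename}",
        raw=f"raw_history/{base}/{filename}",
        curated=f"curated/{base}/{stem}.parquet",
    )


keys = zone_keys("example_product", "orders", "20240101T000000Z", "extract.csv")
print(keys.landing)
print(keys.raw)
print(keys.curated)
```

Keeping the same product, table and timestamp segments in every zone would make it straightforward to trace a curated file back to the raw upload it came from.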

As part of the request to register or amend a Data Product, the API user must provide Data Product metadata. The metadata is processed, validated, and stored separately from the data. The pipeline then integrates with OpenMetadata so that the catalogue reflects the changes made to the Data Product through this ingestion pipeline.
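As an illustration of the validation step, the sketch below checks a metadata payload against a set of required fields. The specification is still under refinement, so the field names used here (`name`, `description`, `data_owner`, `tables`) are assumptions made for the example, not the real schema.

```python
# Hypothetical required fields and their expected Python types.
REQUIRED_FIELDS = {
    "name": str,
    "description": str,
    "data_owner": str,
    "tables": list,
}


def validate_metadata(metadata: dict) -> list[str]:
    """Return a list of validation errors; an empty list means the payload passed."""
    errors = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in metadata:
            errors.append(f"missing required field: {field}")
        elif not isinstance(metadata[field], expected_type):
            errors.append(f"{field} must be of type {expected_type.__name__}")
    return errors


payload = {
    "name": "example_product",
    "description": "An example data product",
    "data_owner": "owner@example.com",
}
print(validate_metadata(payload))
```

Returning every error at once, rather than failing on the first, lets the API report all the problems with a registration request in a single response.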

Each of the pipeline stages outputs standardised logging for audit and debugging purposes. These logs are stored in another restricted-access logging bucket.

Each of the lambda images is stored in a central Elastic Container Registry on the MoJ Modernisation Platform.

Data Ingestion handling

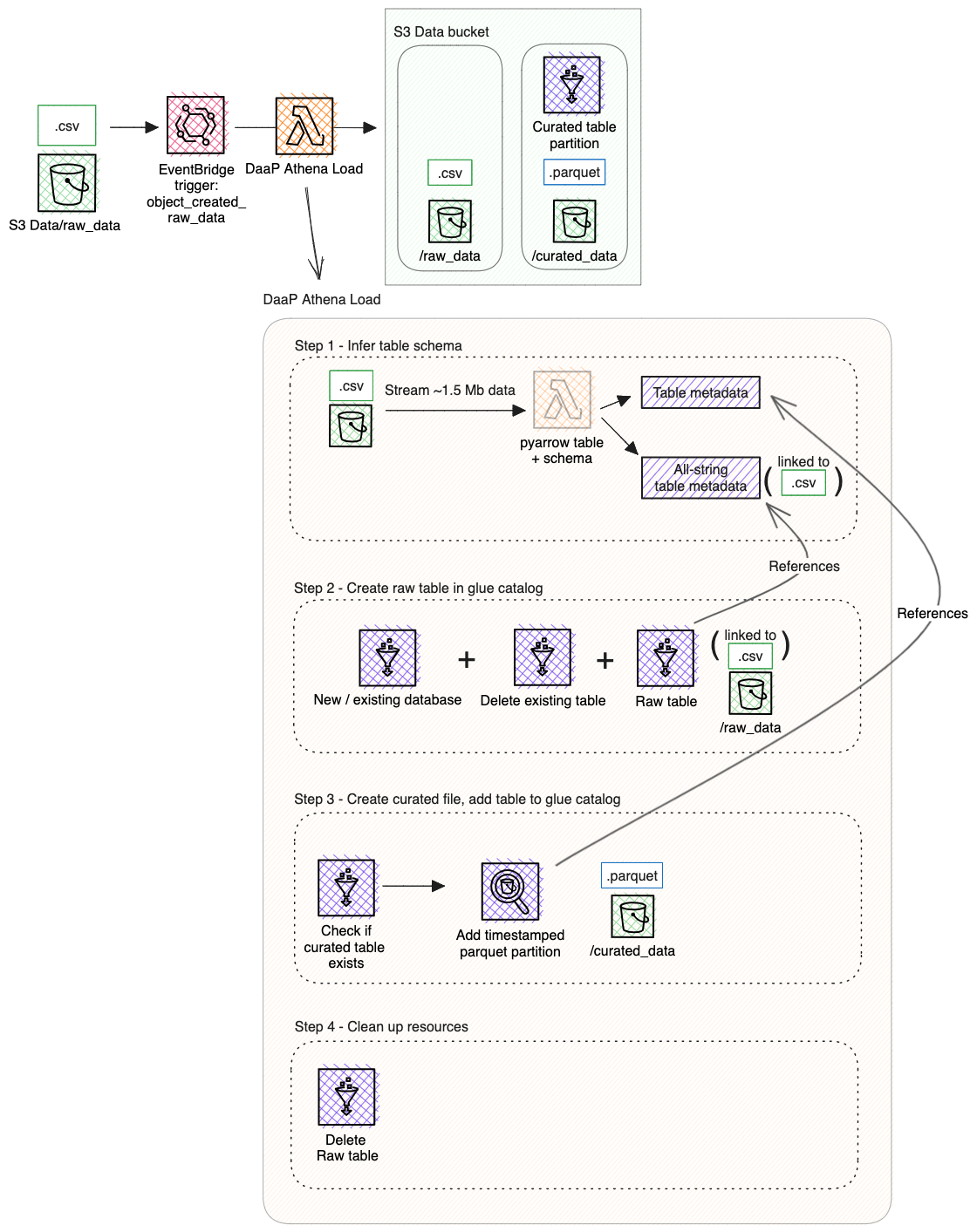

Upon file transfer to the raw_data folder inside the data bucket, an aws.s3 Object Created EventBridge trigger is activated. This calls the daap-athena-load lambda, which creates raw and curated tables in the Glue Catalog.
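A lambda invoked this way receives the EventBridge event as a dictionary, with the bucket name and object key under `detail`. The sketch below shows how a handler might read them. It is not the daap-athena-load code: the function names and the returned dictionaries are illustrative, and the table-creation work is left out.

```python
def parse_object_created(event: dict) -> tuple[str, str]:
    """Extract (bucket, key) from an S3 'Object Created' EventBridge event."""
    if event.get("source") != "aws.s3" or event.get("detail-type") != "Object Created":
        raise ValueError("not an S3 Object Created event")
    detail = event["detail"]
    return detail["bucket"]["name"], detail["object"]["key"]


def handler(event, context):
    """Illustrative lambda entry point; the real load logic is omitted."""
    bucket, key = parse_object_created(event)
    # Only objects under the raw_data folder are of interest to the load step.
    if not key.startswith("raw_data/"):
        return {"status": "skipped", "key": key}
    # A real handler would create or update the Glue tables here.
    return {"status": "accepted", "bucket": bucket, "key": key}


example_event = {
    "source": "aws.s3",
    "detail-type": "Object Created",
    "detail": {
        "bucket": {"name": "example-data-bucket"},
        "object": {"key": "raw_data/example_product/orders/extract.csv"},
    },
}
print(handler(example_event, None))
```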

Assumptions and Risks

The following are the assumptions and risks. These will be mitigated eventually.

The data product language will be Python, which will enable us to run it using Glue.

We assume the code provided by the data product will run without errors. There is a risk that this code is error-prone, so we need to consider whether any unit testing is carried out on the code provided.

Access control for the various tools and users is out of scope for this work.

Scope and Limitations

As this is a PoC, it has a narrow scope and the following limitations:

- Raw data are a full-load snapshot at every upload.

- Raw data are in CSV format.

- Data producers cannot include transformations of the data.

- A load_timestamp, used as the partition, is the only column added to the data during the curation step (conversion to parquet). There is no SCD2.

- No schema changes can be made once a data product is created; users must create a new product.
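To illustrate the curation limitation above, here is a sketch of an Athena CTAS statement that converts a raw table to parquet and adds `load_timestamp` as the only extra column, partitioned on it. This is an assumption about how a first load could be expressed, not necessarily the statement the pipeline issues. The function name, table names and S3 location are hypothetical.

```python
import re

# Only simple identifiers are accepted, because the values are interpolated into SQL.
_SAFE = re.compile(r"[A-Za-z0-9_]+")


def build_curation_ctas(
    database: str,
    raw_table: str,
    curated_table: str,
    curated_location: str,
    load_timestamp: str,
) -> str:
    """Build a CTAS statement that writes the raw table as partitioned parquet."""
    for value in (database, raw_table, curated_table, load_timestamp):
        if not _SAFE.fullmatch(value):
            raise ValueError(f"unsafe value for SQL interpolation: {value!r}")
    # Athena requires partition columns to come last in the SELECT list.
    return (
        f"CREATE TABLE {database}.{curated_table}\n"
        "WITH (\n"
        "    format = 'PARQUET',\n"
        f"    external_location = '{curated_location}',\n"
        "    partitioned_by = ARRAY['load_timestamp']\n"
        ")\n"
        "AS\n"
        f"SELECT *, '{load_timestamp}' AS load_timestamp\n"
        f"FROM {database}.{raw_table}"
    )


print(
    build_curation_ctas(
        "example_product",
        "orders_raw",
        "orders_curated",
        "s3://example-bucket/curated/example_product/orders/",
        "20240101T000000Z",
    )
)
```

Because every upload is a full-load snapshot, each load would land in its own `load_timestamp` partition. A later load into an existing table would need an `INSERT INTO ... SELECT` rather than a CTAS.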

Notes

Use of AWS services

Athena vs. Glue

Athena is cheaper than Glue for compute, as you are charged for the amount of data scanned per query ($5 per TB). Lambda execution is also relatively inexpensive, at $0.0000083 per second with 512 MB of memory.
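The two prices quoted above can be turned into a rough per-load estimate. The sketch below makes simplifying assumptions: 1 TB is treated as 10**12 bytes, and minimum charges, request charges and S3 costs are ignored. The workload figures in the usage example are invented for illustration.

```python
ATHENA_USD_PER_TB = 5.0                   # price per TB scanned, as quoted above
LAMBDA_USD_PER_SECOND_512MB = 0.0000083   # price per second at 512 MB, as quoted above
BYTES_PER_TB = 10**12                     # assumption: decimal terabytes


def athena_query_cost(bytes_scanned: int) -> float:
    """Estimated Athena cost in USD for a query scanning the given number of bytes."""
    return bytes_scanned / BYTES_PER_TB * ATHENA_USD_PER_TB


def lambda_cost(duration_seconds: float) -> float:
    """Estimated cost in USD of a 512 MB lambda running for the given duration."""
    return duration_seconds * LAMBDA_USD_PER_SECOND_512MB


# Illustrative load: a query scanning 10 GB, driven by a lambda running for 30 seconds.
total = athena_query_cost(10 * 10**9) + lambda_cost(30)
print(f"estimated cost per load: ${total:.6f}")
```

Under these assumptions, scanning 10 GB costs about $0.05 and 30 seconds of lambda time costs about $0.00025, so the Athena scan dominates the estimate.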

Initial testing has shown Athena to be much quicker as compute than a Glue Job for moderately sized data. Further benchmarking is required as scale and complexity increase.

API Gateway

API Gateway is used to host and manage our API endpoints. A user will interact with the API to initiate the product registration and data ingestion processes.

EventBridge

We are using EventBridge to kick off lambdas after each successive step of the data ingestion pipeline.
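An EventBridge rule selects the events that start a step by means of an event pattern. The sketch below builds a pattern matching S3 Object Created events for objects under a given prefix in a given bucket. The bucket name and prefix are placeholders, and the rules actually deployed may be defined differently (for example in infrastructure-as-code).

```python
import json


def object_created_pattern(bucket: str, prefix: str) -> str:
    """Return an EventBridge event pattern (as JSON) for new S3 objects under a prefix."""
    pattern = {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {
            "bucket": {"name": [bucket]},
            # Prefix matching limits the rule to one folder of the bucket.
            "object": {"key": [{"prefix": prefix}]},
        },
    }
    return json.dumps(pattern, indent=2)


print(object_created_pattern("example-data-bucket", "raw_data/"))
```

The resulting JSON string is in the form expected for the event pattern of an EventBridge rule, whose target would be the lambda for the next pipeline step.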

Lambda

We are using containerised lambdas for each of the processing steps in the data ingestion pipeline.